Every time you run git merge, resolve a conflict in Google Docs, or watch your notes sync across devices in real-time, you're witnessing the magic of 3-way merge and vector clocks at work. These two concepts form the bedrock of modern distributed systems, enabling billions of devices to collaborate, synchronize, and maintain consistency without central coordination.

Yet, despite their ubiquity, these concepts remain poorly understood by most engineers. Today, we're going to change that.

The Problem: Distributed Systems Are Hard

Imagine you're building a collaborative text editor. Alice in New York edits line 5. Bob in Tokyo edits line 10. Carol in London deletes line 7. All three are working offline, and their changes need to merge seamlessly when they reconnect.

Timeline of Chaos:

Alice (NYC) Bob (Tokyo) Carol (London)

| | |

| [Edit L5] | |

| | [Edit L10] |

| | | [Delete L7]

| | |

└───────────────┴────────────────┘

|

How do we merge?

Without proper algorithms, you face:

- Lost updates - Someone's work gets silently overwritten

- False conflicts - Non-conflicting changes flagged as conflicts

- Inconsistent state - Different users see different "final" versions

- Causality violations - Effects appearing before their causes

This is where vector clocks and 3-way merge come to the rescue.

Part 1: Vector Clocks - Tracking Causality in Distributed Systems

What Are Vector Clocks?

A vector clock is a mechanism for tracking the partial ordering of events in a distributed system. Unlike wall-clock time (which can drift, skew, or be manipulated), vector clocks capture the causal relationship between events.

The key insight: In distributed systems, we don't need to know the exact time something happened. We need to know what happened before what.

The Fundamental Structure

A vector clock is simply a map from node IDs to logical timestamps:

VectorClock = {

node_A: 3,

node_B: 2,

node_C: 5

}

Each entry represents: "This is the latest event I know about from this node."

The Three Rules of Vector Clocks

Rule 1: Local Event → Increment

When a node performs a local operation, it increments its own counter:

def local_event(node_id, vector_clock):

vector_clock[node_id] = vector_clock.get(node_id, 0) + 1

return vector_clock

Rule 2: Send Message → Increment + Attach

When sending a message, increment your counter and attach the vector clock:

def send_message(node_id, vector_clock, message):

vector_clock[node_id] = vector_clock.get(node_id, 0) + 1

return Message(

payload=message,

vector_clock=vector_clock.copy()

)

Rule 3: Receive Message → Merge + Increment

When receiving a message, merge clocks (taking max of each entry) and increment:

def receive_message(node_id, local_clock, received_message):

received_clock = received_message.vector_clock

# Merge: take maximum of each component

merged = {}

all_nodes = set(local_clock.keys()) | set(received_clock.keys())

for node in all_nodes:

merged[node] = max(

local_clock.get(node, 0),

received_clock.get(node, 0)

)

# Increment own counter

merged[node_id] = merged.get(node_id, 0) + 1

return merged

Visual Example: Vector Clocks in Action

Let's trace through a real scenario with three nodes:

Node A Node B Node C

| | |

| {A:1} | |

|----[msg1]-------->| |

| | {A:1,B:1} |

| |----[msg2]-------->|

| | | {A:1,B:1,C:1}

| {A:2} | |

| | {A:1,B:2} |

| | |

|<-----------------[msg3]---------------|

| {A:3,B:1,C:1} | | {A:1,B:1,C:2}

| | |

Step-by-step breakdown:

- A performs local event:

{A:1} - A sends msg1 to B: A's clock stays

{A:1}, B receives and merges to{A:1,B:1} - B sends msg2 to C: C merges to

{A:1,B:1,C:1} - A performs another local event:

{A:2} - B performs local event:

{A:1,B:2} - C sends msg3 to A: A merges to

{A:3,B:1,C:1}(note: A takes max, so B stays at 1 since that's what C knew) - C performs local event:

{A:1,B:1,C:2}

Comparing Vector Clocks: The Power of Partial Ordering

Given two vector clocks V1 and V2, exactly one of four relationships holds:

def compare_vector_clocks(v1, v2):

all_nodes = set(v1.keys()) | set(v2.keys())

v1_greater = False

v2_greater = False

for node in all_nodes:

val1 = v1.get(node, 0)

val2 = v2.get(node, 0)

if val1 > val2:

v1_greater = True

elif val2 > val1:

v2_greater = True

if v1_greater and not v2_greater:

return "V1 HAPPENED_AFTER V2" # V1 > V2

elif v2_greater and not v1_greater:

return "V1 HAPPENED_BEFORE V2" # V1 < V2

elif not v1_greater and not v2_greater:

return "V1 EQUALS V2" # V1 == V2

else:

return "CONCURRENT" # V1 || V2 (conflict!)

The four relationships:

| Relationship | Meaning | Example |

|---|---|---|

V1 < V2 | V1 happened before V2 | {A:1,B:2} < {A:2,B:3} |

V1 > V2 | V1 happened after V2 | {A:3,B:3} > {A:2,B:2} |

V1 == V2 | Same event | {A:2,B:1} == {A:2,B:1} |

V1 || V2 | Concurrent (conflict!) | {A:2,B:1} || {A:1,B:2} |

The magic: When clocks are concurrent, we've detected a potential conflict that needs resolution!

Why Not Just Use Timestamps?

Wall-clock time fails in distributed systems for several reasons:

The Timestamp Problem:

Server A (Clock: 10:00:00.000) Server B (Clock: 10:00:00.050)

| |

[Write X=1] [Write X=2]

T=10:00:00.001 T=10:00:00.002

| |

└──────────── Which wins? ───────────┘

Problem: Server B's clock is 50ms ahead!

Reality: A's write might have happened AFTER B's write

but appears to have happened before.

Issues with wall-clock time:

- Clock drift: Hardware clocks drift ~10-100 ppm

- Clock skew: Different machines have different times

- NTP corrections: Time can jump forward or backward

- Leap seconds: Time isn't monotonic

- No causality: Timestamps don't capture "happened-before"

Vector clocks solve all of this by tracking logical time based on observed events.

Part 2: 3-Way Merge - The Art of Conflict Resolution

What Is 3-Way Merge?

A 3-way merge is an algorithm that combines two divergent versions of something by comparing them against their common ancestor. Unlike a 2-way merge (which can only compare two versions), 3-way merge can determine what actually changed and who changed it.

[Common Ancestor]

|

┌─────────┴─────────┐

| |

v v

[Version A] [Version B]

| |

└─────────┬─────────┘

|

v

[Merged Result]

Why "3-Way"?

The three "ways" refer to the three inputs:

- Base (Ancestor): The original version before divergence

- Ours (Local): Our modified version

- Theirs (Remote): Their modified version

The 3-Way Merge Algorithm

Here's the core logic:

def three_way_merge(base, ours, theirs):

"""

Merge two divergent versions using their common ancestor.

Returns: (merged_result, conflicts)

"""

result = []

conflicts = []

# For each element/line/chunk:

for i in range(max(len(base), len(ours), len(theirs))):

base_val = base[i] if i < len(base) else None

our_val = ours[i] if i < len(ours) else None

their_val = theirs[i] if i < len(theirs) else None

if our_val == their_val:

# Both made same change (or no change) - easy!

result.append(our_val)

elif our_val == base_val:

# We didn't change it, they did - take theirs

result.append(their_val)

elif their_val == base_val:

# They didn't change it, we did - take ours

result.append(our_val)

else:

# Both changed differently from base - CONFLICT!

conflicts.append({

'position': i,

'base': base_val,

'ours': our_val,

'theirs': their_val

})

result.append(f"<<<CONFLICT at {i}>>>")

return result, conflicts

Visual Example: 3-Way Merge in Action

Let's merge two versions of a configuration file:

# BASE (Common Ancestor)

database:

host: localhost

port: 5432

pool_size: 10

cache:

enabled: true

ttl: 3600

# OURS (Local Changes)

database:

host: localhost

port: 5432

pool_size: 20 # Changed: 10 → 20

cache:

enabled: true

ttl: 3600

logging: # Added new section

level: INFO

# THEIRS (Remote Changes)

database:

host: db.prod.com # Changed: localhost → db.prod.com

port: 5432

pool_size: 10

cache:

enabled: false # Changed: true → false

ttl: 3600

3-Way Merge Analysis:

| Field | Base | Ours | Theirs | Result | Reasoning |

|---|---|---|---|---|---|

database.host | localhost | localhost | db.prod.com | db.prod.com | We didn't change, they did |

database.port | 5432 | 5432 | 5432 | 5432 | Nobody changed |

database.pool_size | 10 | 20 | 10 | 20 | We changed, they didn't |

cache.enabled | true | true | false | false | We didn't change, they did |

cache.ttl | 3600 | 3600 | 3600 | 3600 | Nobody changed |

logging | ∅ | {level:INFO} | ∅ | {level:INFO} | We added, they didn't |

Merged Result:

database:

host: db.prod.com # From theirs

port: 5432

pool_size: 20 # From ours

cache:

enabled: false # From theirs

ttl: 3600

logging: # From ours

level: INFO

No conflicts! The 3-way merge algorithm automatically resolved everything because changes didn't overlap.

When Conflicts Occur

Conflicts happen when both sides modified the same thing differently:

# BASE

timeout: 30

# OURS

timeout: 60 # We increased it

# THEIRS

timeout: 15 # They decreased it

Result: CONFLICT!

<<<<<<< OURS

timeout: 60

=======

timeout: 15

>>>>>>> THEIRS

The algorithm cannot automatically decide which value is correct. A human (or application-specific logic) must resolve it.

The Power of Having a Common Ancestor

Without the base (2-way merge), we'd have many more "false conflicts":

2-Way Merge (No Base):

OURS: timeout: 60

THEIRS: timeout: 60

Are these the same because:

a) Neither changed it (it was always 60)

b) Both independently changed it to 60

c) One changed it, one didn't

We can't tell! Must flag as potential conflict.

3-Way Merge (With Base):

BASE: timeout: 30

OURS: timeout: 60

THEIRS: timeout: 60

Now we know: Both changed 30 → 60

Result: Take 60, no conflict (convergent change)

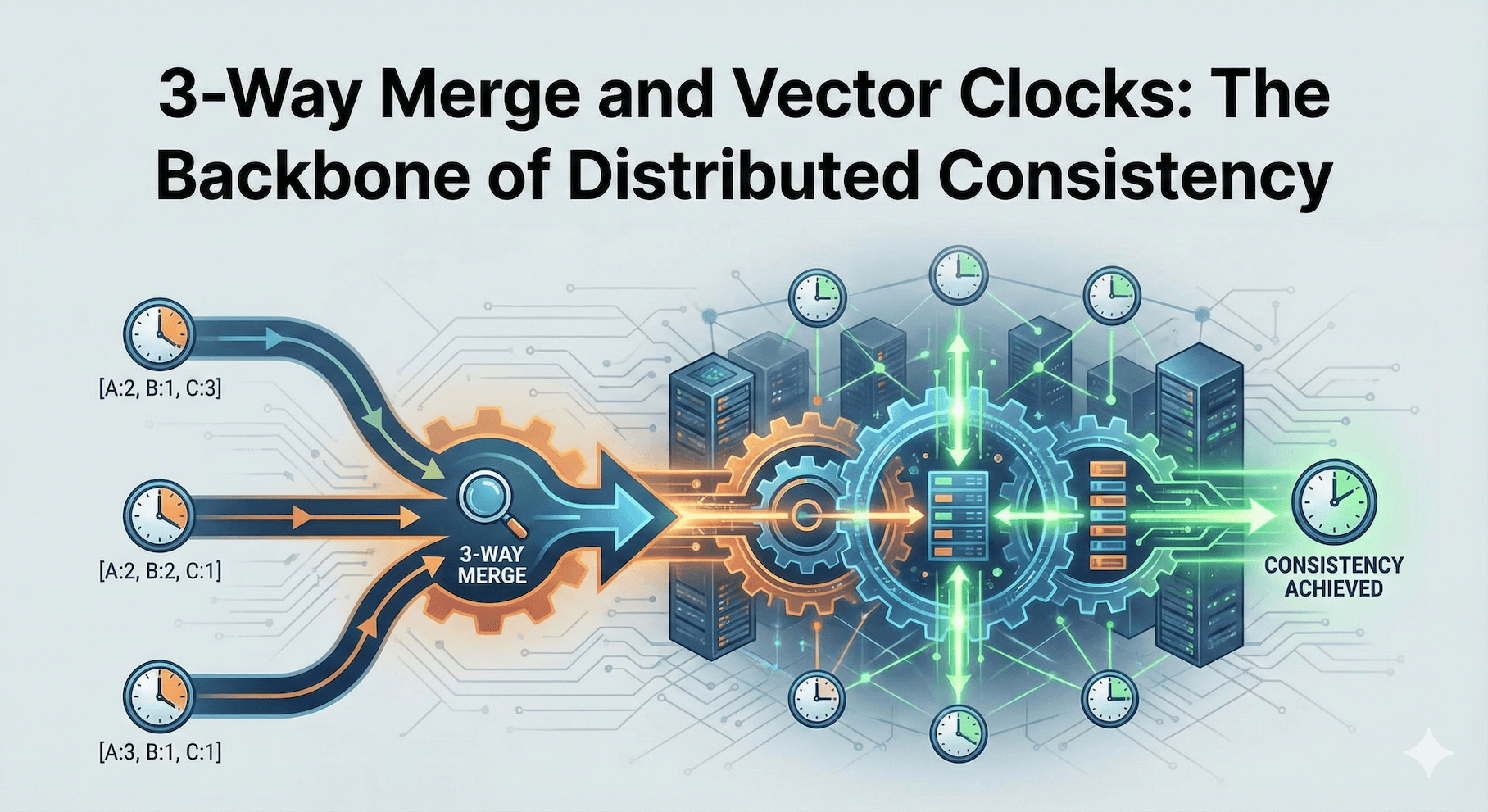

Part 3: Vector Clocks + 3-Way Merge = Distributed Consistency

The Marriage of Two Concepts

Vector clocks and 3-way merge work together beautifully:

- Vector clocks identify WHEN conflicts occur (concurrent modifications)

- 3-way merge resolves HOW to merge (using common ancestor)

┌─────────────────────────────────────────────────────────────┐

│ DISTRIBUTED MERGE FLOW │

├─────────────────────────────────────────────────────────────┤

│ │

│ [Event A] [Event B] │

│ VC: {A:3,B:2} VC: {A:2,B:4} │

│ │ │ │

│ └──────────┬───────────┘ │

│ │ │

│ Compare Vector Clocks │

│ │ │

│ ┌────────┴────────┐ │

│ │ │ │

│ [Ordered] [Concurrent] │

│ │ │ │

│ Take later Find LCA using │

│ version version DAG │

│ │ │ │

│ │ 3-Way Merge │

│ │ / | \ │

│ │ Base Ours Theirs │

│ │ \ | / │

│ └──────────┬────┴────┬────────────────────────── │

│ │ │ │

│ [No Conflict] [Conflict] │

│ │ │ │

│ Auto-merge Manual/ │

│ App-specific │

│ resolution │

└─────────────────────────────────────────────────────────────┘

Finding the Lowest Common Ancestor (LCA)

For 3-way merge to work, we need to find the common ancestor. In a version DAG (Directed Acyclic Graph), this is the Lowest Common Ancestor (LCA):

def find_lca(version_a, version_b, version_graph):

"""

Find the Lowest Common Ancestor of two versions.

The version_graph maps each version to its parent(s).

"""

# Get all ancestors of A

ancestors_a = set()

queue = [version_a]

while queue:

current = queue.pop(0)

if current in ancestors_a:

continue

ancestors_a.add(current)

queue.extend(version_graph.get(current, {}).get('parents', []))

# BFS from B to find first common ancestor

queue = [version_b]

visited = set()

while queue:

current = queue.pop(0)

if current in visited:

continue

visited.add(current)

if current in ancestors_a:

return current # Found LCA!

queue.extend(version_graph.get(current, {}).get('parents', []))

return None # No common ancestor (shouldn't happen in well-formed DAG)

Real Implementation: A Distributed Key-Value Store

Let's build a simple distributed KV store that uses both concepts:

import hashlib

import json

from dataclasses import dataclass, field

from typing import Dict, List, Optional, Any

from enum import Enum

class ConflictResolution(Enum):

LAST_WRITE_WINS = "lww"

FIRST_WRITE_WINS = "fww"

MANUAL = "manual"

MERGE_VALUES = "merge"

@dataclass

class Version:

"""A versioned value with vector clock."""

value: Any

vector_clock: Dict[str, int]

node_id: str

timestamp: float # Wall clock for LWW fallback

def to_hash(self) -> str:

"""Generate unique hash for this version."""

content = json.dumps({

'value': self.value,

'vc': sorted(self.vector_clock.items())

}, sort_keys=True)

return hashlib.sha256(content.encode()).hexdigest()[:12]

@dataclass

class VersionedValue:

"""A value that may have multiple concurrent versions."""

key: str

versions: List[Version] = field(default_factory=list)

def add_version(self, version: Version):

"""Add a new version, pruning dominated versions."""

dominated = []

dominated_by_new = False

for existing in self.versions:

cmp = compare_vector_clocks(existing.vector_clock, version.vector_clock)

if cmp == "HAPPENED_BEFORE":

# Existing is older, mark for removal

dominated.append(existing)

elif cmp == "HAPPENED_AFTER":

# New version is older, don't add it

dominated_by_new = True

break

elif cmp == "EQUALS":

# Same version, skip

dominated_by_new = True

break

# If CONCURRENT, keep both

if not dominated_by_new:

# Remove dominated versions

self.versions = [v for v in self.versions if v not in dominated]

self.versions.append(version)

def has_conflicts(self) -> bool:

"""Check if there are concurrent versions (conflicts)."""

return len(self.versions) > 1

def get_value(self, resolution: ConflictResolution = ConflictResolution.LAST_WRITE_WINS):

"""Get the value, resolving conflicts if necessary."""

if not self.versions:

return None

if len(self.versions) == 1:

return self.versions[0].value

# Multiple versions - need to resolve

if resolution == ConflictResolution.LAST_WRITE_WINS:

return max(self.versions, key=lambda v: v.timestamp).value

elif resolution == ConflictResolution.FIRST_WRITE_WINS:

return min(self.versions, key=lambda v: v.timestamp).value

elif resolution == ConflictResolution.MANUAL:

raise ConflictError(f"Key {self.key} has conflicts", self.versions)

elif resolution == ConflictResolution.MERGE_VALUES:

return self._merge_values()

def _merge_values(self) -> Any:

"""Attempt automatic merge of conflicting values."""

# For sets/lists, take union

if all(isinstance(v.value, (list, set)) for v in self.versions):

merged = set()

for v in self.versions:

merged.update(v.value)

return list(merged)

# For dicts, do 3-way merge

if all(isinstance(v.value, dict) for v in self.versions):

return self._three_way_dict_merge()

# Can't auto-merge, fall back to LWW

return max(self.versions, key=lambda v: v.timestamp).value

def _three_way_dict_merge(self) -> dict:

"""

Perform 3-way merge on dictionary values.

Uses empty dict as base if no common ancestor available.

"""

# Simplified: use empty dict as base

# In real implementation, would find LCA

base = {}

# Start with base

result = base.copy()

conflicts = {}

all_keys = set()

for v in self.versions:

all_keys.update(v.value.keys())

for key in all_keys:

values = []

for v in self.versions:

values.append(v.value.get(key))

unique_values = list(set(str(v) for v in values))

if len(unique_values) == 1:

# All agree

result[key] = self.versions[0].value.get(key)

else:

# Conflict on this key - take most recent

latest = max(self.versions, key=lambda v: v.timestamp)

result[key] = latest.value.get(key)

conflicts[key] = values

return result

class ConflictError(Exception):

"""Raised when manual conflict resolution is required."""

def __init__(self, message, versions):

super().__init__(message)

self.versions = versions

def compare_vector_clocks(v1: Dict[str, int], v2: Dict[str, int]) -> str:

"""Compare two vector clocks and return their relationship."""

all_nodes = set(v1.keys()) | set(v2.keys())

v1_greater = False

v2_greater = False

for node in all_nodes:

val1 = v1.get(node, 0)

val2 = v2.get(node, 0)

if val1 > val2:

v1_greater = True

elif val2 > val1:

v2_greater = True

if v1_greater and not v2_greater:

return "HAPPENED_AFTER"

elif v2_greater and not v1_greater:

return "HAPPENED_BEFORE"

elif not v1_greater and not v2_greater:

return "EQUALS"

else:

return "CONCURRENT"

class DistributedKVStore:

"""A distributed key-value store using vector clocks."""

def __init__(self, node_id: str):

self.node_id = node_id

self.vector_clock: Dict[str, int] = {}

self.data: Dict[str, VersionedValue] = {}

def _increment_clock(self):

"""Increment this node's logical clock."""

self.vector_clock[self.node_id] = self.vector_clock.get(self.node_id, 0) + 1

def _merge_clock(self, other_clock: Dict[str, int]):

"""Merge another vector clock into ours."""

all_nodes = set(self.vector_clock.keys()) | set(other_clock.keys())

for node in all_nodes:

self.vector_clock[node] = max(

self.vector_clock.get(node, 0),

other_clock.get(node, 0)

)

def put(self, key: str, value: Any, context: Optional[Dict[str, int]] = None):

"""

Write a value with optional causal context.

If context is provided, it represents the version being updated.

"""

import time

# Merge context if provided (read-your-writes)

if context:

self._merge_clock(context)

self._increment_clock()

version = Version(

value=value,

vector_clock=self.vector_clock.copy(),

node_id=self.node_id,

timestamp=time.time()

)

if key not in self.data:

self.data[key] = VersionedValue(key=key)

self.data[key].add_version(version)

return self.vector_clock.copy()

def get(self, key: str, resolution: ConflictResolution = ConflictResolution.LAST_WRITE_WINS):

"""

Read a value with conflict resolution strategy.

Returns (value, context, has_conflicts)

"""

if key not in self.data:

return None, {}, False

vv = self.data[key]

value = vv.get_value(resolution)

# Build context from all versions' clocks

context = {}

for version in vv.versions:

for node, clock in version.vector_clock.items():

context[node] = max(context.get(node, 0), clock)

return value, context, vv.has_conflicts()

def sync_from(self, other: 'DistributedKVStore'):

"""Sync data from another node."""

self._merge_clock(other.vector_clock)

for key, other_vv in other.data.items():

if key not in self.data:

self.data[key] = VersionedValue(key=key)

for version in other_vv.versions:

self.data[key].add_version(version)

self._increment_clock()

# Demo usage

def demo():

"""Demonstrate vector clocks and conflict resolution."""

# Create three nodes

node_a = DistributedKVStore("node_a")

node_b = DistributedKVStore("node_b")

node_c = DistributedKVStore("node_c")

print("=" * 60)

print("DISTRIBUTED KV STORE DEMO")

print("=" * 60)

# Scenario 1: Sequential updates (no conflict)

print("\n--- Scenario 1: Sequential Updates ---")

ctx = node_a.put("user:1", {"name": "Alice", "age": 30})

print(f"Node A writes user:1 -> Context: {ctx}")

# Sync to B

node_b.sync_from(node_a)

# B updates with context

value, ctx, _ = node_b.get("user:1")

ctx = node_b.put("user:1", {"name": "Alice", "age": 31}, context=ctx)

print(f"Node B updates user:1 (age: 31) -> Context: {ctx}")

# Sync back to A

node_a.sync_from(node_b)

value, ctx, conflicts = node_a.get("user:1")

print(f"Node A reads user:1 -> {value}, Conflicts: {conflicts}")

# Scenario 2: Concurrent updates (conflict!)

print("\n--- Scenario 2: Concurrent Updates (Conflict) ---")

# Reset

node_a = DistributedKVStore("node_a")

node_b = DistributedKVStore("node_b")

# A writes

node_a.put("config", {"timeout": 30})

# B writes independently (no sync)

node_b.put("config", {"timeout": 60})

print(f"Node A writes config.timeout=30")

print(f"Node B writes config.timeout=60 (independently)")

# Now sync

node_a.sync_from(node_b)

value, ctx, conflicts = node_a.get("config")

print(f"After sync - Value: {value}, Has Conflicts: {conflicts}")

print(f"Node A's versions: {len(node_a.data['config'].versions)}")

# Scenario 3: Conflict resolution with merge

print("\n--- Scenario 3: Dict Merge Resolution ---")

node_a = DistributedKVStore("node_a")

node_b = DistributedKVStore("node_b")

# A writes some fields

node_a.put("settings", {"theme": "dark", "lang": "en"})

# B writes different fields

node_b.put("settings", {"timezone": "UTC", "lang": "en"})

node_a.sync_from(node_b)

value, _, conflicts = node_a.get("settings", ConflictResolution.MERGE_VALUES)

print(f"Merged settings: {value}")

print(f"(Combined fields from both concurrent writes)")

if __name__ == "__main__":

demo()

Output:

============================================================

DISTRIBUTED KV STORE DEMO

============================================================

--- Scenario 1: Sequential Updates ---

Node A writes user:1 -> Context: {'node_a': 1}

Node B updates user:1 (age: 31) -> Context: {'node_a': 1, 'node_b': 2}

Node A reads user:1 -> {'name': 'Alice', 'age': 31}, Conflicts: False

--- Scenario 2: Concurrent Updates (Conflict) ---

Node A writes config.timeout=30

Node B writes config.timeout=60 (independently)

After sync - Value: 60, Has Conflicts: True

Node A's versions: 2

--- Scenario 3: Dict Merge Resolution ---

Merged settings: {'theme': 'dark', 'lang': 'en', 'timezone': 'UTC'}

(Combined fields from both concurrent writes)

Part 4: Real-World Applications

1. Git: The 3-Way Merge Master

Git is perhaps the most famous user of 3-way merge. Every git merge operation:

# What happens when you run:

git merge feature-branch

# Git performs:

# 1. Find LCA (merge-base) of HEAD and feature-branch

# 2. 3-way merge: (merge-base, HEAD, feature-branch)

# 3. Auto-resolve non-conflicting changes

# 4. Mark conflicts for manual resolution

Git's merge-base algorithm:

# Find the common ancestor

$ git merge-base main feature

a1b2c3d4e5f6...

# View the three versions

$ git show a1b2c3d:file.txt # Base

$ git show main:file.txt # Ours

$ git show feature:file.txt # Theirs

Git internally uses a variant of vector clocks through its DAG of commits, where each commit references its parent(s), creating a partial order.

2. Amazon Dynamo / Apache Cassandra: Vector Clocks at Scale

Amazon's Dynamo (and its open-source successors like Cassandra and Riak) use vector clocks for conflict detection:

Dynamo Write Flow:

Client Coordinator Replicas (N=3)

| | |

|--- PUT(key,value) --->| |

| |--- Write + VC --------->| R1

| |--- Write + VC --------->| R2

| |--- Write + VC --------->| R3

| | |

| |<-- ACK (W=2) -----------|

|<-- Context (VC) ------| |

| | |

Read Repair:

|--- GET(key) --------->| |

| |--- Read --------------->| R1: VC={A:1}

| |--- Read --------------->| R2: VC={A:1}

| |--- Read --------------->| R3: VC={B:1} ← Stale!

| | |

| | Compare VCs: |

| | R1,R2 > R3 → Repair R3 |

| | |

|<-- Value + VC --------| |

Cassandra's approach: Uses timestamps (not vector clocks) for simplicity, with Last-Write-Wins semantics. Trade-off: simpler but can lose concurrent writes.

Riak's approach: Full vector clocks with sibling resolution (returns all concurrent values to client).

3. Google Docs / Operational Transformation

Google Docs uses Operational Transformation (OT) which shares principles with 3-way merge:

Alice types "Hello" at position 0

Bob types "World" at position 0 (concurrently)

Without transformation:

Alice sees: "HelloWorld"

Bob sees: "WorldHello"

❌ Inconsistent!

With OT:

Transform Bob's operation based on Alice's:

Bob's insert(0, "World") → insert(5, "World")

Both see: "HelloWorld"

✓ Consistent!

Modern alternatives like CRDTs (Conflict-free Replicated Data Types) achieve similar results with different trade-offs.

4. CRDTs: Merge Without Coordination

CRDTs are data structures designed to always merge without conflicts:

# G-Counter (Grow-only Counter) - A simple CRDT

class GCounter:

"""

A CRDT counter that only grows.

Uses vector clock-like structure internally.

"""

def __init__(self, node_id: str):

self.node_id = node_id

self.counts: Dict[str, int] = {}

def increment(self):

self.counts[self.node_id] = self.counts.get(self.node_id, 0) + 1

def value(self) -> int:

return sum(self.counts.values())

def merge(self, other: 'GCounter'):

"""Merge is always conflict-free!"""

all_nodes = set(self.counts.keys()) | set(other.counts.keys())

for node in all_nodes:

self.counts[node] = max(

self.counts.get(node, 0),

other.counts.get(node, 0)

)

# LWW-Register (Last-Writer-Wins Register)

@dataclass

class LWWRegister:

"""

A register where concurrent writes resolve via timestamp.

"""

value: Any = None

timestamp: float = 0.0

def set(self, value: Any, timestamp: float):

if timestamp > self.timestamp:

self.value = value

self.timestamp = timestamp

def merge(self, other: 'LWWRegister'):

if other.timestamp > self.timestamp:

self.value = other.value

self.timestamp = other.timestamp

# OR-Set (Observed-Remove Set) - Add/Remove without conflicts

class ORSet:

"""

A set CRDT that supports both add and remove.

Each element tagged with unique ID to handle add/remove conflicts.

"""

def __init__(self):

self.elements: Dict[Any, Set[str]] = {} # value -> set of unique tags

self.tombstones: Dict[Any, Set[str]] = {} # removed tags

def add(self, element: Any):

import uuid

tag = str(uuid.uuid4())

if element not in self.elements:

self.elements[element] = set()

self.elements[element].add(tag)

def remove(self, element: Any):

if element in self.elements:

# Move all tags to tombstones

if element not in self.tombstones:

self.tombstones[element] = set()

self.tombstones[element].update(self.elements[element])

self.elements[element] = set()

def contains(self, element: Any) -> bool:

if element not in self.elements:

return False

# Element exists if it has tags not in tombstones

active_tags = self.elements[element] - self.tombstones.get(element, set())

return len(active_tags) > 0

def merge(self, other: 'ORSet'):

# Merge elements (union of tags)

for elem, tags in other.elements.items():

if elem not in self.elements:

self.elements[elem] = set()

self.elements[elem].update(tags)

# Merge tombstones (union)

for elem, tags in other.tombstones.items():

if elem not in self.tombstones:

self.tombstones[elem] = set()

self.tombstones[elem].update(tags)

5. Distributed Databases Comparison

| Database | Conflict Detection | Conflict Resolution | Trade-off |

|---|---|---|---|

| Riak | Vector Clocks | Siblings (client resolves) | Most flexible, complex client |

| Cassandra | Timestamps | Last-Write-Wins | Simple, can lose data |

| CockroachDB | Hybrid Logical Clocks | Serializable isolation | Strong consistency, higher latency |

| DynamoDB | Conditional writes | Optimistic locking | Simple, requires retries |

| MongoDB | Oplog timestamps | Last-Write-Wins per doc | Simple, eventual consistency |

| Spanner | TrueTime (atomic clocks) | Global serialization | Strongest, requires special hardware |

Part 5: Advanced Topics

Hybrid Logical Clocks (HLC)

Vector clocks have a problem: they grow with the number of nodes. Hybrid Logical Clocks combine physical time with logical counters:

@dataclass

class HybridLogicalClock:

"""

Combines wall-clock time with logical counter.

Provides bounded size while maintaining causality.

"""

wall_time: int # Physical timestamp (milliseconds)

logical: int # Logical counter for same wall_time

def __init__(self):

self.wall_time = self._now()

self.logical = 0

def _now(self) -> int:

import time

return int(time.time() * 1000)

def tick(self) -> 'HybridLogicalClock':

"""Local event."""

now = self._now()

if now > self.wall_time:

self.wall_time = now

self.logical = 0

else:

self.logical += 1

return self

def receive(self, other: 'HybridLogicalClock') -> 'HybridLogicalClock':

"""Receive event from another node."""

now = self._now()

if now > self.wall_time and now > other.wall_time:

self.wall_time = now

self.logical = 0

elif self.wall_time == other.wall_time:

self.logical = max(self.logical, other.logical) + 1

elif self.wall_time > other.wall_time:

self.logical += 1

else:

self.wall_time = other.wall_time

self.logical = other.logical + 1

return self

def __lt__(self, other: 'HybridLogicalClock') -> bool:

if self.wall_time != other.wall_time:

return self.wall_time < other.wall_time

return self.logical < other.logical

def __repr__(self):

return f"HLC({self.wall_time}.{self.logical})"

Benefits of HLC:

- Fixed size (just 2 numbers) vs growing vector clocks

- Bounded drift from real time

- Still captures causality

- Used by CockroachDB, MongoDB, and others

Dotted Version Vectors

An optimization over vector clocks that reduces sibling explosion:

@dataclass

class DottedVersionVector:

"""

Optimized version vector that tracks:

- version_vector: What we've seen from each node

- dot: The specific (node, counter) that created this value

"""

version_vector: Dict[str, int]

dot: tuple # (node_id, counter)

def dominates(self, other: 'DottedVersionVector') -> bool:

"""Check if this DVV dominates (is newer than) another."""

other_node, other_counter = other.dot

return self.version_vector.get(other_node, 0) >= other_counter

def concurrent_with(self, other: 'DottedVersionVector') -> bool:

"""Check if two DVVs are concurrent (neither dominates)."""

return not self.dominates(other) and not other.dominates(self)

Interval Tree Clocks

For highly dynamic systems where nodes join and leave frequently:

Traditional Vector Clock problem:

- 1000 nodes = 1000 entries in every clock

- Nodes that leave still take up space

Interval Tree Clocks solution:

- Use intervals [0,1] instead of discrete node IDs

- Nodes "own" portions of the interval

- Can split/join without coordination

- Space proportional to active nodes only

Part 6: Implementation Patterns and Best Practices

Pattern 1: Read-Your-Writes with Vector Clocks

class SessionConsistentClient:

"""

Client that ensures read-your-writes consistency

by tracking vector clock context.

"""

def __init__(self, store: DistributedKVStore):

self.store = store

self.session_context: Dict[str, int] = {}

def write(self, key: str, value: Any):

# Include session context to ensure causality

new_context = self.store.put(key, value, context=self.session_context)

# Update session context

self._merge_context(new_context)

def read(self, key: str) -> Any:

value, context, _ = self.store.get(key)

# Update session context

self._merge_context(context)

return value

def _merge_context(self, new_context: Dict[str, int]):

for node, clock in new_context.items():

self.session_context[node] = max(

self.session_context.get(node, 0),

clock

)

Pattern 2: Crujdt Resolution Strategies

class ConflictResolver:

"""Pluggable conflict resolution strategies."""

@staticmethod

def last_write_wins(versions: List[Version]) -> Version:

return max(versions, key=lambda v: v.timestamp)

@staticmethod

def first_write_wins(versions: List[Version]) -> Version:

return min(versions, key=lambda v: v.timestamp)

@staticmethod

def highest_value(versions: List[Version]) -> Version:

"""For numeric values, take the highest."""

return max(versions, key=lambda v: v.value)

@staticmethod

def merge_sets(versions: List[Version]) -> Any:

"""For set values, take union."""

result = set()

for v in versions:

if isinstance(v.value, (list, set)):

result.update(v.value)

return result

@staticmethod

def application_specific(versions: List[Version], resolver_fn) -> Any:

"""Custom application logic."""

return resolver_fn(versions)

Pattern 3: Merkle Trees for Efficient Sync

class MerkleTree:

"""

Hash tree for efficient comparison of large datasets.

Used by Dynamo, Cassandra, etc. for anti-entropy.

"""

def __init__(self, data: Dict[str, Any]):

self.leaves = {}

self.root = self._build(data)

def _hash(self, content: str) -> str:

return hashlib.sha256(content.encode()).hexdigest()[:16]

def _build(self, data: Dict[str, Any]) -> str:

if not data:

return self._hash("")

# Sort keys for deterministic ordering

sorted_keys = sorted(data.keys())

# Hash each key-value pair

for key in sorted_keys:

value_hash = self._hash(str(data[key]))

self.leaves[key] = value_hash

# Build tree bottom-up

level = list(self.leaves.values())

while len(level) > 1:

next_level = []

for i in range(0, len(level), 2):

left = level[i]

right = level[i + 1] if i + 1 < len(level) else ""

next_level.append(self._hash(left + right))

level = next_level

return level[0] if level else self._hash("")

def diff(self, other: 'MerkleTree') -> List[str]:

"""Find keys that differ between two trees."""

if self.root == other.root:

return [] # Identical!

# Find differing leaves

diffs = []

all_keys = set(self.leaves.keys()) | set(other.leaves.keys())

for key in all_keys:

if self.leaves.get(key) != other.leaves.get(key):

diffs.append(key)

return diffs

Key Takeaways

When to Use Vector Clocks

✅ Use vector clocks when:

- You need to detect concurrent modifications

- Causality matters (e.g., "reply must come after message")

- You want flexibility in conflict resolution

- System has bounded number of writers

❌ Avoid vector clocks when:

- Simple last-write-wins is acceptable

- System has unbounded/dynamic nodes (use HLC instead)

- Strong consistency is required (use consensus protocols)

When to Use 3-Way Merge

✅ Use 3-way merge when:

- You can identify a common ancestor

- Content is structured (text lines, config fields, objects)

- Auto-resolution of non-conflicting changes is valuable

- Users can handle manual conflict resolution

❌ Avoid 3-way merge when:

- No common ancestor exists

- Content is opaque binary data

- Real-time collaboration required (use OT/CRDT instead)

Design Principles

- Capture causality, not wall-clock time - Vector clocks tell you what happened before what

- Design for conflict resolution - Conflicts will happen; plan for them

- Use the right tool - LWW for simplicity, vector clocks for correctness, CRDTs for automatic merge

- Keep context - Pass version context through read/write cycles

- Bound your metadata - Vector clocks grow; consider HLC for large systems

Conclusion

Vector clocks and 3-way merge are fundamental algorithms that power the distributed systems we use every day. From Git's elegant merge system to the conflict resolution in distributed databases, these concepts enable billions of devices to collaborate while maintaining consistency.

Understanding these algorithms deeply transforms how you think about distributed systems:

- Conflicts aren't errors - they're expected outcomes that need resolution strategies

- Time is relative - logical ordering matters more than wall-clock time

- Common ancestors are powerful - they enable intelligent, automatic merging

The next time you run git merge or watch your notes sync across devices, you'll know the elegant mathematics happening under the hood.

Want to discuss distributed systems, algorithms, or anything tech?